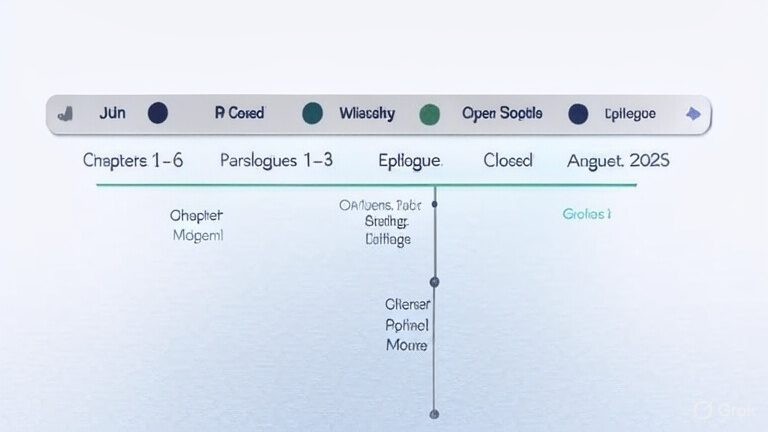

In late June 2025, I launched DeepMEE, a solo R&D project to build a hardware-only music production setup, using an agile approach with semi-formal documentation. My partner was Grok (xAI), which managed tasks and reports. We hit a wall: AI “brainmelt,” where the limits of Grok’s 128k-token context window caused errors that threatened progress. Here’s how it unfolded, how it frustrated me, and how we tamed it.

What Was Brainmelt?

Brainmelt was Grok’s struggle with dense chats, not my own overload; I could handle the project’s complexity. The worst case hit on the evening of July 2, 2025, when I trusted Grok to document our work. By the morning of July 3, everything was lost: responses had turned verbose, reports were botched, and progress was erased. A gap in the reports from July 2, 14:15 CEST, to July 5, 13:29 CEST, confirms this. Errors persisted later (e.g., on July 17, Grok misinterpreted “generate full report” and truncated the JSON). Unlike a teammate’s absence, Grok’s errors actively harmed progress and put the project at risk. Fixing things like missing “Chapters and Paralogues” sections felt like Job’s trials: frustrating, not triumphant, as I covered for the AI’s shortcomings.

The Cost

Brainmelt cost 5–7 working days, a third of DeepMEE’s 2.5-week duration (June 29–August 10, 2025). The July 2–3 loss and the rework that followed (e.g., reports discarded on July 13, fixes on July 17) ate up that time. Constantly correcting Grok’s outputs felt like babysitting, not collaboration.

Our Mitigation Journey

- Limiting Chat Duration/Messages: We thought long chats or high message counts caused brainmelt, so we tried caps (e.g., starting a new chat at 150 messages). This failed because context complexity, not message count, was the issue. Part 6 (July 14–15, ~107 messages) succeeded without brainmelt.

- Super-Small Chats: Splitting tasks into tiny chats that reported back to a main chat flopped, likely because context was lost between them. We missed a chance to combine this with JSON reporting, which could have been more efficient. We’re exploring that now with the Epilogue tasks.

- JSON-Based Continuity: Grok proposed a JSON log to capture project state and a modus operandi with three modes (concise, verbose, hai). I said “great,” and we refined it to enforce concise responses, strict report rules (filename first, HTML in code window, JSON in head, table formats), and a message counter (suggest report at 100 messages, no proactive generation). By July 17, 2025, reports at message 25 were near-perfect, and Part 6 closed cleanly.
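The JSON log and message counter described in the last bullet can be sketched in a few lines of Python. This is a minimal illustration, not the actual DeepMEE log: the `SessionState` class, its field names (`part`, `mode`, `message_count`, `milestones`), and the method names are hypothetical. Only the three mode names and the 100-message threshold come from the text above.

```python
import json
from dataclasses import dataclass, field, asdict

# The three response modes named in the text; their exact semantics are assumed.
MODES = ("concise", "verbose", "hai")
REPORT_THRESHOLD = 100  # suggest (never auto-generate) a report at this count


@dataclass
class SessionState:
    """Hypothetical project-state record carried from one chat to the next."""
    part: str
    mode: str = "concise"
    message_count: int = 0
    milestones: list = field(default_factory=list)

    def __post_init__(self):
        if self.mode not in MODES:
            raise ValueError(f"unknown mode: {self.mode!r}")

    def record_message(self) -> bool:
        """Count one message; return True once a report should be suggested."""
        self.message_count += 1
        return self.message_count >= REPORT_THRESHOLD

    def to_json(self) -> str:
        """Serialize the state so it can be pasted into a fresh chat."""
        return json.dumps(asdict(self), indent=2)

    @classmethod
    def from_json(cls, text: str) -> "SessionState":
        """Rebuild the state from a previously saved JSON log."""
        return cls(**json.loads(text))


state = SessionState(part="Part 6")
suggestions = [state.record_message() for _ in range(100)]
restored = SessionState.from_json(state.to_json())
```

The point of the round trip is that the state lives outside the chat: a new conversation starts from the JSON rather than from the model’s memory of the old one.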

Did It Work?

The JSON approach saved the project. By August 10, 2025, we hit milestones (e.g., Chapter 5, proof-of-concept) without brainmelt. My fixes (e.g., report fonts, section inclusion) were key. I distilled a JSON snippet (RE.8) for future AI interactions, a big win. But the JSON had grown to 27 kB by the project’s end (~1/5 of Grok’s token window), which warns of scalability issues: tripling the project could push it to 80 kB, risking brainmelt unless the log is pruned, a chore that feels like a dystopian role reversal.
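The scalability worry can be checked with back-of-the-envelope arithmetic. The sketch below assumes roughly one token per byte of JSON, which is the ratio implied by equating 27 kB with about a fifth of a 128k-token window. Real tokenizers vary, so treat the results as rough estimates; `window_fraction` is an illustrative helper, not part of any tool used in the project.

```python
CONTEXT_WINDOW_TOKENS = 128_000  # window size stated in the article
TOKENS_PER_BYTE = 1.0            # assumption implied by "27 kB ~ 1/5 of the window"


def window_fraction(log_bytes: int, tokens_per_byte: float = TOKENS_PER_BYTE) -> float:
    """Estimate the share of the context window a JSON log of this size consumes."""
    return log_bytes * tokens_per_byte / CONTEXT_WINDOW_TOKENS


current = window_fraction(27_000)      # log size at the project's end
tripled = window_fraction(3 * 27_000)  # same log for a project three times larger

print(f"current: {current:.0%}, tripled: {tripled:.0%}")
# prints "current: 21%, tripled: 63%"
```

Under that assumption, a tripled project would spend well over half the window on the log alone, before any actual conversation.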

Reflections

Brainmelt exposed AI’s limits: a 128k token window is too small for complex projects, and errors can derail progress. Grok’s JSON idea was a game-changer, but scalability remains a concern. Solving brainmelt was a grind, not a joy, teaching me to set firm AI boundaries and stay vigilant. For future projects, I’d keep AI out of mission-critical roles for anything over 3–4 man-weeks to avoid such risks.

What’s your take on managing AI in creative projects?